When you are building a CI/CD Kubernetes cluster and need some Persistent Volumes, cloud block storage sounds too expensive, especially when you already have 150 GB of local disk across your nodes.

In my case, I’m using the DigitalOcean Kubernetes product with 3 nodes, each with 50 GB of disk, at $10 per node per month, for a total of $30 per month.

In this article I’m exploring Rook, a cloud-native storage orchestrator for Kubernetes. The first and most stable storage driver is the Ceph one, so I’m using it here, but it shouldn’t be too different for the other available drivers.

So let’s see what we can get from this.

In this article I’m assuming you have a k8s cluster and the kubectl command is configured correctly to access your cluster.
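A quick way to check that assumption is to count the nodes kubectl can see. This is only a minimal sketch: `count_nodes` is a helper name I made up for illustration, and it relies on nothing more than `kubectl get nodes`.

```shell
#!/bin/sh
# count_nodes: print how many nodes the current kubectl context can list.
# The function name is a hypothetical helper, not part of kubectl.
count_nodes() {
  # --no-headers drops the column header, so each remaining line is one node
  kubectl get nodes --no-headers | wc -l | tr -d ' '
}

# Example (needs a configured cluster):
#   count_nodes
```

On a three-node cluster like the one used here, this should print 3. An error instead usually means the kubectl context is not set up yet.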

Rook deployment

Rook operator

The Rook operator is responsible for discovering disks and creating the volumes.

The Rook team provides a Helm chart to install it, so let’s follow the instructions from the documentation to install the stable version:

    $ helm init
    $ kubectl --namespace kube-system create sa tiller
    $ kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller
    $ kubectl --namespace kube-system patch deploy/tiller-deploy -p '{"spec": {"template": {"spec": {"serviceAccountName": "tiller"}}}}'
    $ helm repo add rook-stable https://charts.rook.io/stable
    $ helm install --namespace rook-ceph-system rook-stable/rook-ceph
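Before moving on, it can help to wait until the operator pods are actually ready. The sketch below wraps `kubectl wait`. The helper name `wait_for_operator` and the 300s default timeout are my own choices, and the namespace is the one passed to `helm install` above.

```shell
#!/bin/sh
# wait_for_operator: block until every pod in the operator namespace is Ready.
# The helper name and the default timeout are assumptions for illustration.
wait_for_operator() {
  namespace="${1:-rook-ceph-system}"
  timeout="${2:-300s}"
  kubectl --namespace "$namespace" wait --for=condition=Ready pod --all --timeout="$timeout"
}

# Example (needs a configured cluster):
#   wait_for_operator
#   wait_for_operator rook-ceph-system 600s
```

If it is called immediately after the install, the pods may not exist yet and the command can fail right away. In that case simply run it again a few seconds later.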

Now that the operator is deployed, we have to deploy the Rook cluster.

Rook cluster

The Rook cluster deployment consists of creating a rook-ceph namespace, some ServiceAccounts and Roles/RoleBindings, and finally a CephCluster.

We will also enable the Ceph dashboard without SSL/TLS so that we can access it locally through the Kubernetes proxy.

First of all, have a look at the Ceph Docker tags in order to get the latest one and create the following rook-cluster.yaml file :

    apiVersion: v1
    kind: Namespace
    metadata:
      name: rook-ceph
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: rook-ceph-osd
      namespace: rook-ceph
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: rook-ceph-mgr
      namespace: rook-ceph
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-osd
      namespace: rook-ceph
    rules:
    - apiGroups: [""]
      resources: ["configmaps"]
      verbs: [ "get", "list", "watch", "create", "update", "delete" ]
    ---
    # Aspects of ceph-mgr that require access to the system namespace
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-mgr-system
      namespace: rook-ceph
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    # Aspects of ceph-mgr that operate within the cluster's namespace
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-mgr
      namespace: rook-ceph
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - jobs
      verbs:
      - get
      - list
      - watch
      - create
      - update
      - delete
    - apiGroups:
      - ceph.rook.io
      resources:
      - "*"
      verbs:
      - "*"
    ---
    # Allow the operator to create resources in this cluster's namespace
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-cluster-mgmt
      namespace: rook-ceph
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: rook-ceph-cluster-mgmt
    subjects:
    - kind: ServiceAccount
      name: rook-ceph-system
      namespace: rook-ceph-system
    ---
    # Allow the osd pods in this namespace to work with configmaps
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-osd
      namespace: rook-ceph
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: rook-ceph-osd
    subjects:
    - kind: ServiceAccount
      name: rook-ceph-osd
      namespace: rook-ceph
    ---
    # Allow the ceph mgr to access the cluster-specific resources necessary for the mgr modules
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-mgr
      namespace: rook-ceph
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: rook-ceph-mgr
    subjects:
    - kind: ServiceAccount
      name: rook-ceph-mgr
      namespace: rook-ceph
    ---
    # Allow the ceph mgr to access the rook system resources necessary for the mgr modules
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-mgr-system
      namespace: rook-ceph-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: rook-ceph-mgr-system
    subjects:
    - kind: ServiceAccount
      name: rook-ceph-mgr
      namespace: rook-ceph
    ---
    # Allow the ceph mgr to access cluster-wide resources necessary for the mgr modules
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: rook-ceph-mgr-cluster
      namespace: rook-ceph
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: rook-ceph-mgr-cluster
    subjects:
    - kind: ServiceAccount
      name: rook-ceph-mgr
      namespace: rook-ceph
    ---
    apiVersion: ceph.rook.io/v1
    kind: CephCluster
    metadata:
      name: rook-ceph
      namespace: rook-ceph
    spec:
      cephVersion:
        # For the latest ceph images, see https://hub.docker.com/r/ceph/ceph/tags
        image: ceph/ceph:v13.2.3-20190109
      dataDirHostPath: /var/lib/rook
      mon:
        count: 3
        allowMultiplePerNode: true
      dashboard:
        enabled: true
        port: 8080
        ssl: false
      storage:
        useAllNodes: true
        useAllDevices: false
        config:
          databaseSizeMB: "1024"
          journalSizeMB: "1024"

Deploy it :

    $ kubectl apply -f rook-cluster.yaml

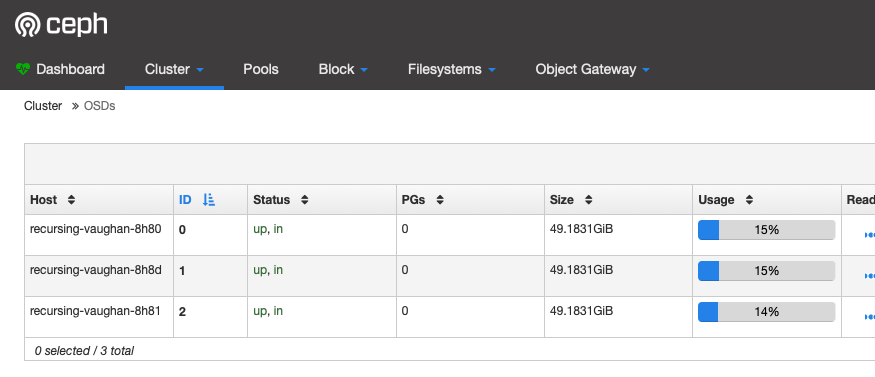

It will take some time for the node discovery to complete and for all the Rook pods to be up and running, so follow the progress with the kubectl get pods --namespace=rook-ceph command.

Your cluster is ready when you have one mon and one osd pod per node, plus a mgr pod:

    $ kubectl get pods --namespace=rook-ceph
    NAME                               READY   STATUS    RESTARTS   AGE
    rook-ceph-mgr-a-7cbd67c79b-lrhrk   1/1     Running   0          2m42s
    rook-ceph-mon-a-56579fbc78-qs52z   1/1     Running   0          4m11s
    rook-ceph-mon-b-7b4c8f48dd-vvk2h   1/1     Running   0          3m42s
    rook-ceph-mon-c-5688b75489-ng7l7   1/1     Running   0          3m12s
    rook-ceph-osd-0-5f4c54c69b-7dr27   1/1     Running   0          89s
    rook-ceph-osd-1-769f54d9f6-h699t   1/1     Running   0          88s
    rook-ceph-osd-2-868dfcdf69-gqbx8   1/1     Running   0          89s
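Rather than counting pods by eye, the readiness rule above can be checked with a small filter over the `kubectl get pods` output. This is a sketch under assumptions: `count_running` is a helper name of my own, and it expects the default column layout shown above (NAME, READY, STATUS, ...).

```shell
#!/bin/sh
# count_running: read `kubectl get pods` output on stdin and print how many
# pods whose name starts with the given prefix are fully ready and Running.
# The function name is a hypothetical helper for illustration.
count_running() {
  prefix="$1"
  awk -v p="$prefix" '
    index($1, p) == 1 && $3 == "Running" {
      split($2, ready, "/")            # READY column looks like "1/1"
      if (ready[1] == ready[2]) n++
    }
    END { print n + 0 }
  '
}

# Example (needs a configured cluster):
#   kubectl get pods --namespace=rook-ceph | count_running rook-ceph-mon-
#   kubectl get pods --namespace=rook-ceph | count_running rook-ceph-osd-
```

With three nodes, both counts should reach 3, and the `rook-ceph-mgr-` prefix should give 1.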

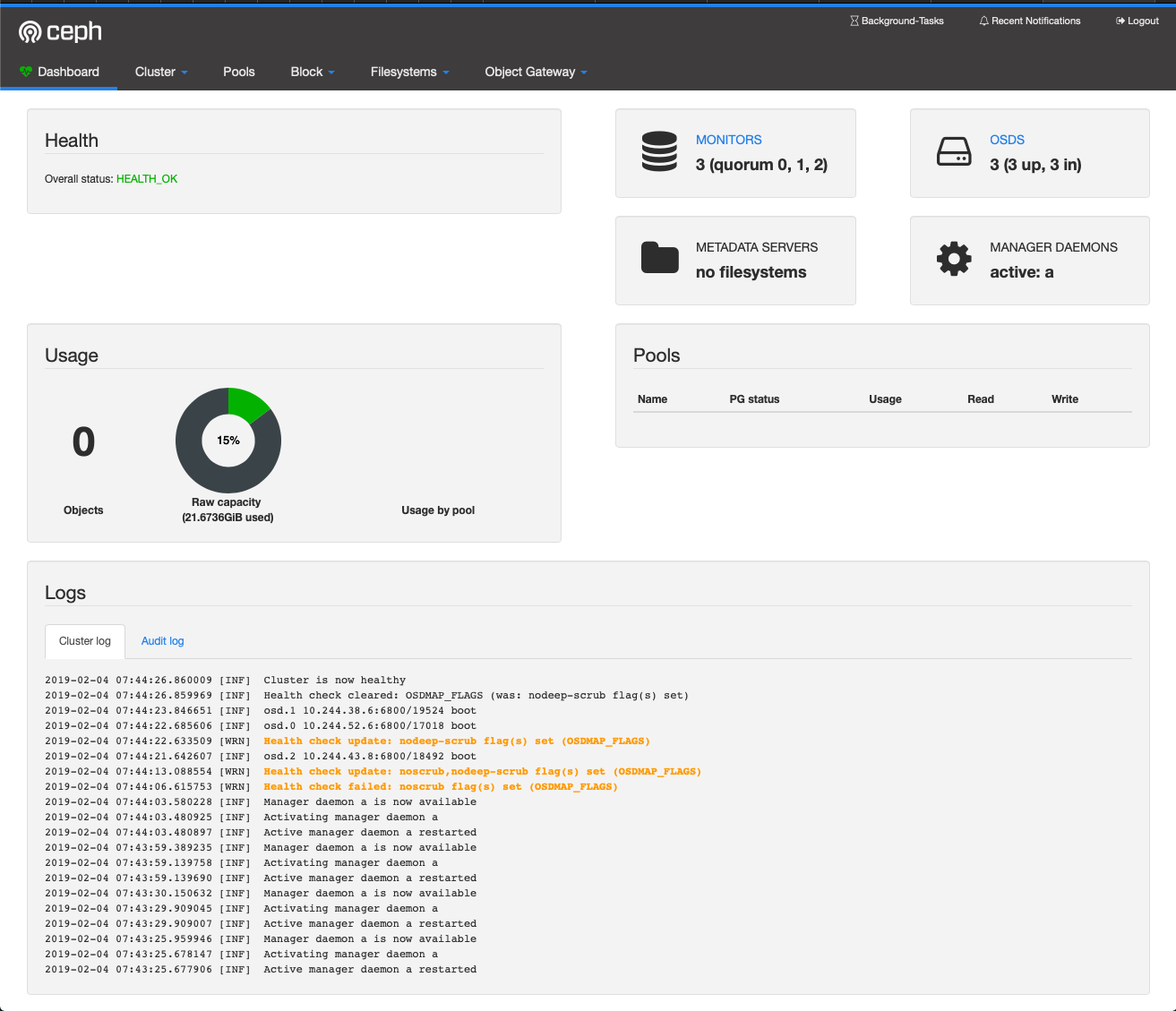

Ceph Dashboard

Now you can access the Ceph dashboard :

- Start the Kubernetes proxy with kubectl proxy
- Open the following URL : http://localhost:8001/api/v1/namespaces/rook-ceph/services/rook-ceph-mgr-dashboard:https-dashboard/proxy

To get the login and password, use the following:

    $ kubectl logs rook-ceph-mgr-a-7cbd67c79b-lrhrk --namespace=rook-ceph | grep "dashboard set-login-credentials"
    2019-02-04 07:43:20.128 7f89f4286700 0 log_channel(audit) log [DBG] : from='client.14126 10.244.38.0:0/3232824830' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "Yb2V1yjHmm", "target": ["mgr", ""], "format": "json"}]: dispatch

You can see here that the login is admin and the password is Yb2V1yjHmm.
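To avoid reading the password out of that long log line by hand, a small `sed` filter can extract it. This is a sketch that assumes the log keeps the exact `"password": "..."` shape shown above, which may differ in other Rook or Ceph versions. The helper name `extract_dashboard_password` is mine.

```shell
#!/bin/sh
# extract_dashboard_password: read mgr log lines on stdin and print the value
# of the "password" field from each line that contains one.
# Assumes the log format shown in this article; the helper name is hypothetical.
extract_dashboard_password() {
  sed -n 's/.*"password": "\([^"]*\)".*/\1/p'
}

# Example (needs a configured cluster; use your own mgr pod name):
#   kubectl logs rook-ceph-mgr-a-7cbd67c79b-lrhrk --namespace=rook-ceph \
#     | grep "dashboard set-login-credentials" \
#     | extract_dashboard_password
```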

Kubernetes StorageClass

Now we have to tell Kubernetes about the Rook StorageClass so that we will be able to create Volumes.

Deploy the StorageClass

Create a rook-storage-class.yaml file with the following :

    apiVersion: ceph.rook.io/v1
    kind: CephBlockPool
    metadata:
      name: replicapool
      namespace: rook-ceph
    spec:
      failureDomain: host
      replicated:
        size: 3
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rook-ceph-block
    provisioner: ceph.rook.io/block
    parameters:
      blockPool: replicapool
      # Specify the namespace of the rook cluster from which to create volumes.
      # If not specified, it will use `rook` as the default namespace of the cluster.
      # This is also the namespace where the cluster will be
      clusterNamespace: rook-ceph
      # Specify the filesystem type of the volume. If not specified, it will use `ext4`.
      # fstype: xfs
      # (Optional) Specify an existing Ceph user that will be used for mounting storage with this StorageClass.
      #mountUser: user1
      # (Optional) Specify an existing Kubernetes secret name containing just one key holding the Ceph user secret.
      # The secret must exist in each namespace(s) where the storage will be consumed.
      #mountSecret: ceph-user1-secret
    reclaimPolicy: Retain

(I have commented out the fstype attribute as DigitalOcean doesn’t support XFS)
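One consequence of `size: 3` in the CephBlockPool is worth spelling out: every block is stored three times, so the 150 GB of raw disk gives roughly 50 GB of usable space at best. The arithmetic is sketched below with a made-up helper, `usable_gb`. It ignores the space taken by the node's system, container images and Ceph's own overhead, so the real figure will be lower.

```shell
#!/bin/sh
# usable_gb NODES DISK_GB_PER_NODE REPLICAS
# Rough upper bound on usable capacity for a replicated pool:
#   raw capacity divided by the number of replicas (integer division).
# The helper name is hypothetical; overheads are deliberately ignored.
usable_gb() {
  echo $(( $1 * $2 / $3 ))
}

# Example: 3 nodes with 50 GB each and 3 replicas
#   usable_gb 3 50 3
```

Lowering the replica count raises the usable space but reduces how many node failures the pool can survive.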

And deploy it :

    $ kubectl apply -f rook-storage-class.yaml

Now check the StorageClasses :

    $ kubectl get storageclasses
    NAME                         PROVISIONER                 AGE
    do-block-storage (default)   dobs.csi.digitalocean.com   108m
    rook-ceph-block              ceph.rook.io/block          3s

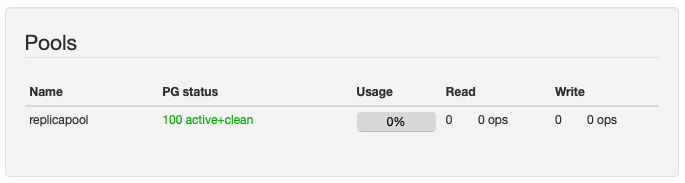

Also, from the Ceph Dashboard, the created Pool is visible :

Define it as the default

The DigitalOcean block storage (backed by their CSI driver) is the default StorageClass. To avoid mistakes, we make rook-ceph-block the default instead, as described in the Kubernetes doc :

    $ kubectl patch storageclass do-block-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'
    $ kubectl patch storageclass rook-ceph-block -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
    $ kubectl get storageclasses
    NAME                        PROVISIONER                 AGE
    do-block-storage            dobs.csi.digitalocean.com   114m
    rook-ceph-block (default)   ceph.rook.io/block          5m58s
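Since the goal is to end up with exactly one default, the result can be double-checked by filtering that output for the `(default)` marker. The sketch below assumes the plain-text layout shown above, where the marker is the second column. `default_storage_classes` is a helper name I chose for illustration.

```shell
#!/bin/sh
# default_storage_classes: read `kubectl get storageclasses` output on stdin
# and print the name of every class flagged "(default)".
# Assumes the column layout shown in this article; the name is hypothetical.
default_storage_classes() {
  awk '$2 == "(default)" { print $1 }'
}

# Example (needs a configured cluster):
#   kubectl get storageclasses | default_storage_classes
```

If this prints more than one name, two classes are still marked as default and one of the patches above did not apply.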

Deploy a PersistentVolumeClaim

To allow a Pod to use a PersistentVolume, we have to create a PersistentVolumeClaim in the new file rook-persistent-volume-claim.yaml :

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: redis-pv-claim
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 5Gi
      storageClassName: rook-ceph-block

And deploy it :

    $ kubectl apply -f rook-persistent-volume-claim.yaml

And check it :

    $ kubectl get pvc
    NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    redis-pv-claim   Bound    pvc-ce88c79d-2861-11e9-9714-6e4e5abdddf2   5Gi        RWO            rook-ceph-block   6s
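The claim may stay Pending for a few seconds while Rook provisions the volume. In a script, that can be handled by polling the claim's phase until it reports Bound. The loop below is a sketch: `wait_for_pvc_bound`, the default of 30 attempts and the 2-second pause are arbitrary choices of mine.

```shell
#!/bin/sh
# wait_for_pvc_bound CLAIM [ATTEMPTS]
# Poll the claim's .status.phase until it is "Bound" or attempts run out.
# Helper name, attempt count and sleep interval are illustrative assumptions.
wait_for_pvc_bound() {
  claim="$1"
  attempts="${2:-30}"
  phase=""
  i=0
  while [ "$i" -lt "$attempts" ]; do
    phase="$(kubectl get pvc "$claim" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "$claim is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$claim is still '$phase' after $attempts attempts" >&2
  return 1
}

# Example (needs a configured cluster):
#   wait_for_pvc_bound redis-pv-claim
```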

Use the Rook volumes

Let’s deploy a pod requesting a volume. Create the test-pod-volume.yaml file with the following :

    apiVersion: v1
    kind: Pod
    metadata:
      name: redis
    spec:
      containers:
      - name: redis
        image: redis:4.0.5-alpine
        resources:
          requests:
            cpu: "200m"
            memory: "64Mi"
        volumeMounts:
        - name: redis-pv
          mountPath: /data/redis
      volumes:
      - name: redis-pv
        persistentVolumeClaim:
          claimName: redis-pv-claim

(The redis container keeps the pod up and running, so we will be able to execute commands in it)

And deploy it :

    $ kubectl apply -f test-pod-volume.yaml

Let’s check the PersistentVolumes and PersistentVolumeClaims :

    $ kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS      REASON   AGE
    pvc-5c827a63-285e-11e9-9714-6e4e5abdddf2   5Gi        RWO            Retain           Bound    default/redis-pv-claim   rook-ceph-block            7m48s
    $ kubectl get pvc
    NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    redis-pv-claim   Bound    pvc-5c827a63-285e-11e9-9714-6e4e5abdddf2   5Gi        RWO            rook-ceph-block   8m20s

To test that everything works, we will attach to the pod, create a file, delete the pod, re-create it, and check that the file is still there :

First create a file in the pod :

    $ kubectl exec -it redis -- /bin/sh
    /data # cd redis/
    /data/redis # ls -al
    total 24
    drwxr-xr-x    3 redis    root          4096 Feb  4 09:22 .
    drwxr-xr-x    3 redis    redis         4096 Feb  4 09:22 ..
    drwx------    2 redis    root         16384 Feb  4 09:22 lost+found
    /data/redis # echo "Please, don't delete me !" > testfile
    /data/redis # cat testfile
    Please, don't delete me !

Now let’s delete the pod and re-create it :

    $ kubectl delete -f test-pod-volume.yaml
    $ kubectl apply -f test-pod-volume.yaml

Finally let’s check our file :

    $ kubectl exec -it redis -- /bin/sh
    /data # cd redis/
    /data/redis # ls -al
    total 28
    drwxr-xr-x    3 redis    root          4096 Feb  4 09:23 .
    drwxr-xr-x    3 redis    redis         4096 Feb  4 09:25 ..
    drwx------    2 redis    root         16384 Feb  4 09:22 lost+found
    -rw-r--r--    1 redis    root            26 Feb  4 09:23 testfile
    /data/redis # cat testfile
    Please, don't delete me !

As you can see, the file persisted across the pod re-creation. 🎉
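The same manual test can be scripted so it is easy to repeat after a cluster change. What follows is only a sketch of the steps above, not a hardened test. `check_persistence` and its default marker text are names I invented, and it assumes the pod is called redis and mounts the volume at /data/redis, as in test-pod-volume.yaml.

```shell
#!/bin/sh
# check_persistence [MANIFEST] [MARKER]
# Write a marker file on the volume, re-create the pod, then read it back.
# Helper name and defaults are illustrative; MARKER must not contain quotes.
check_persistence() {
  manifest="${1:-test-pod-volume.yaml}"
  marker="${2:-persistence-check}"

  # Write the marker through a non-interactive shell inside the pod
  kubectl exec redis -- sh -c "echo '$marker' > /data/redis/testfile" || return 1
  # Re-create the pod from the same manifest and wait until it is Ready
  kubectl delete -f "$manifest" || return 1
  kubectl apply -f "$manifest" || return 1
  kubectl wait --for=condition=Ready pod/redis --timeout=120s || return 1

  found="$(kubectl exec redis -- cat /data/redis/testfile)"
  if [ "$found" = "$marker" ]; then
    echo "OK: file survived pod recreation"
  else
    echo "FAIL: expected '$marker', got '$found'" >&2
    return 1
  fi
}

# Example (needs a configured cluster and the redis pod from this article):
#   check_persistence test-pod-volume.yaml
```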